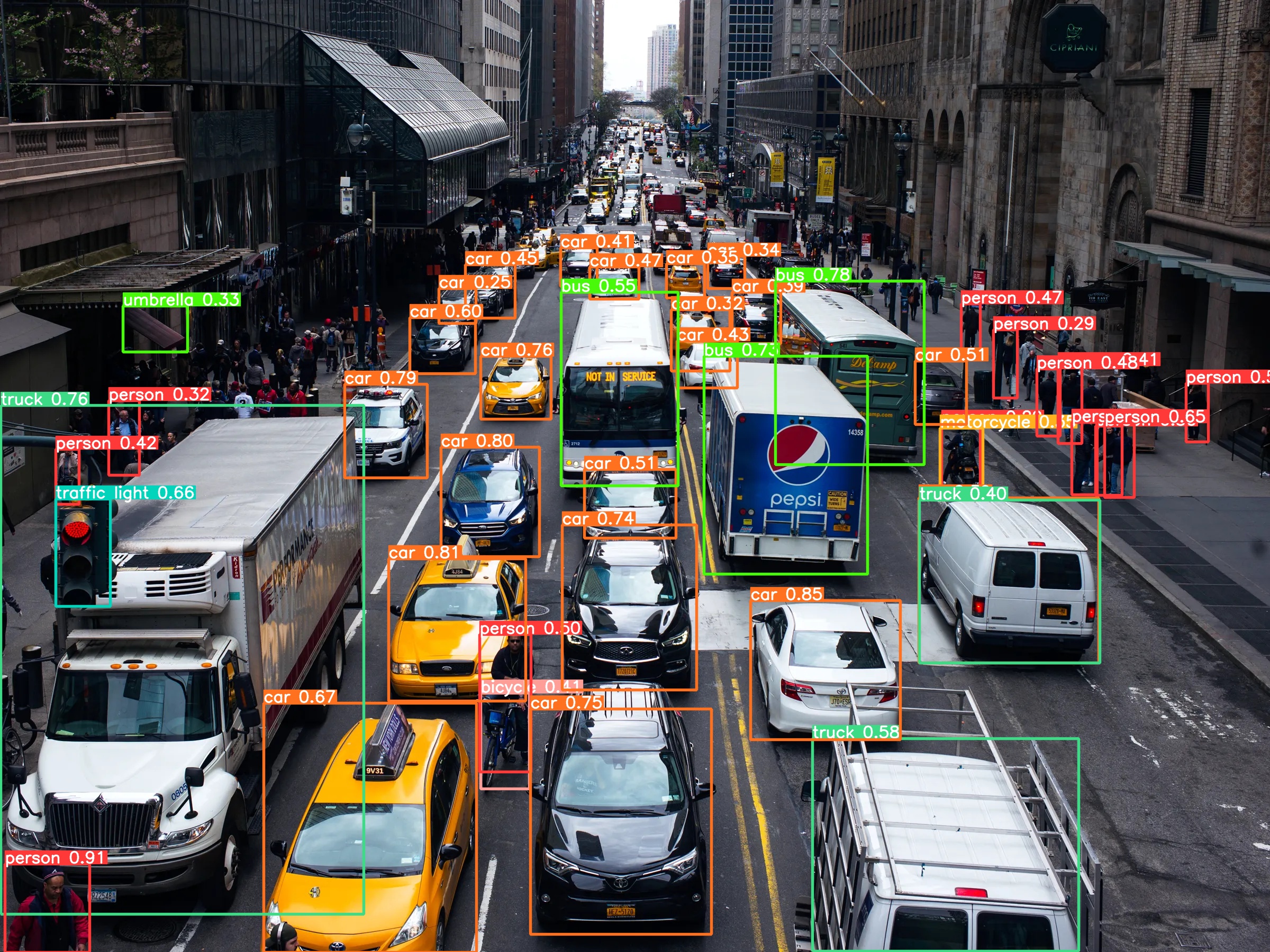

Object detection is a fundamental task in computer vision, enabling machines to identify and locate various objects within an image or video. Over the years, numerous algorithms have been developed to tackle this challenge, and one notable breakthrough is the YOLO (You Only Look Once) series. Among them, YOLO v5 has gained significant attention and praise for its exceptional speed and accuracy in object detection.

Released in 2020 by Glenn Jocher and Ultralytics as an open-source PyTorch implementation, YOLO v5 builds on its predecessors, YOLO v1 through v4, and adds several practical improvements for real-time object detection. It is sometimes attributed to Alexey Bochkovskiy, but he is the lead author of YOLO v4, not v5. Unlike the earlier versions, YOLO v5 was published as a code repository rather than alongside a research paper.

Speed has always been a crucial factor in object detection, particularly where low-latency predictions are essential, such as autonomous driving, robotics, and surveillance. YOLO v5 is designed for this setting. As a single-stage detector, it predicts bounding boxes and classes in one forward pass over the image, and its implementation can export models to deployment formats such as ONNX and TensorRT.

One practical feature of YOLO v5 is its family of model sizes (n, s, m, l, and x), all built from the same architecture by scaling layer depth and channel width. This should not be confused with "Scaled-YOLOv4," which is a separate model from the YOLO v4 authors and is not part of YOLO v5. The smaller variants are lightweight enough to deploy on resource-constrained hardware, including embedded systems and edge devices, while the larger variants trade speed for higher accuracy.
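To make the idea of scaling one architecture into several sizes concrete, here is a minimal sketch. It assumes the depth and width multipliers listed in the public YOLOv5 model configuration files and the rounding rules used when those files are parsed; the function names are hypothetical, and the values should be checked against the repository before relying on them.

```python
import math

# Assumed (depth_multiple, width_multiple) pairs per model size.
MULTIPLIERS = {
    "n": (0.33, 0.25),
    "s": (0.33, 0.50),
    "m": (0.67, 0.75),
    "l": (1.00, 1.00),
    "x": (1.33, 1.25),
}


def scale_depth(repeats: int, depth_multiple: float) -> int:
    """Scale how often a block repeats, keeping at least one repeat."""
    if repeats <= 1:
        return repeats
    return max(round(repeats * depth_multiple), 1)


def scale_width(channels: int, width_multiple: float, divisor: int = 8) -> int:
    """Scale a channel count and round it up to a multiple of `divisor`."""
    return math.ceil(channels * width_multiple / divisor) * divisor


def scaled_layer(size: str, repeats: int, channels: int) -> tuple[int, int]:
    """Return (repeats, channels) for one layer at the given model size."""
    depth_multiple, width_multiple = MULTIPLIERS[size]
    return scale_depth(repeats, depth_multiple), scale_width(channels, width_multiple)
```

Under these assumptions, a block defined with 9 repeats and 1024 channels in the base configuration becomes 3 repeats and 512 channels in the "s" model, and stays at 9 repeats and 1024 channels in the "l" model.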

YOLO v5 is sometimes described as "anchor-free," but this is incorrect. Like YOLO v2 through v4, it predicts bounding boxes relative to predefined anchor boxes. What it adds is an automatic anchor check ("AutoAnchor"): before training, it tests how well the default anchors fit the dataset's labels and, if the fit is poor, recomputes them using k-means clustering refined by a genetic algorithm. This removes a manual tuning step on custom datasets. Truly anchor-free heads appear in later detectors such as YOLOX and YOLOv8.
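In the public YOLOv5 code, each predicted box is decoded relative to a grid cell and an anchor prior. The following is a minimal sketch of that decoding for a single prediction, assuming the sigmoid-based formulas used in recent releases; the function and argument names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(raw, cell, anchor, stride):
    """Turn raw head outputs into a box (center x, center y, width, height) in pixels.

    raw    -- (tx, ty, tw, th), the unbounded network outputs
    cell   -- (column, row) of the grid cell that made the prediction
    anchor -- (width, height) of the anchor prior, in pixels
    stride -- pixels per grid cell at this detection scale
    """
    tx, ty, tw, th = raw
    col, row = cell
    anchor_w, anchor_h = anchor
    # The center can move up to half a cell beyond the cell's borders.
    x = (2.0 * sigmoid(tx) - 0.5 + col) * stride
    y = (2.0 * sigmoid(ty) - 0.5 + row) * stride
    # Width and height are bounded between 0 and 4 times the anchor size.
    w = (2.0 * sigmoid(tw)) ** 2 * anchor_w
    h = (2.0 * sigmoid(th)) ** 2 * anchor_h
    return x, y, w, h
```

With all raw outputs at zero, the box sits at the center of its cell and has exactly the anchor's size, which is why well-fitted anchors give the network a good starting point.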

YOLO v5 also relies on "Mosaic data augmentation," a technique it shares with YOLO v4. This method combines four training images into a single mosaic image, so that each training sample shows objects at varied positions and scales and in contexts where they would not normally appear. Mosaic augmentation improves generalization and robustness, particularly for small objects, which leads to better detection performance in real-world scenarios.
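The core of the mosaic idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the library's implementation: it assumes four images that are each at least as large as the output canvas, crops rather than resizes them, and leaves out the bounding-box labels, which would need the same shift and clipping. The function name and the gray fill value are assumptions.

```python
import numpy as np


def mosaic4(images, size, rng=None, fill=114):
    """Tile four HxWx3 uint8 images around a random center on a size x size canvas."""
    if len(images) != 4:
        raise ValueError("mosaic4 needs exactly four images")
    if rng is None:
        rng = np.random.default_rng()
    # Pick the point where the four tiles meet, away from the canvas edges.
    xc = int(rng.integers(size // 4, 3 * size // 4))
    yc = int(rng.integers(size // 4, 3 * size // 4))
    canvas = np.full((size, size, 3), fill, dtype=np.uint8)
    # Canvas regions as (y0, y1, x0, x1): top-left, top-right, bottom-left, bottom-right.
    regions = [
        (0, yc, 0, xc),
        (0, yc, xc, size),
        (yc, size, 0, xc),
        (yc, size, xc, size),
    ]
    for image, (y0, y1, x0, x1) in zip(images, regions):
        height = min(y1 - y0, image.shape[0])
        width = min(x1 - x0, image.shape[1])
        # Copy the top-left crop of each image into its region.
        canvas[y0:y0 + height, x0:x0 + width] = image[:height, :width]
    return canvas, (xc, yc)
```

Because the meeting point is random, the same four images produce a different layout each time they are drawn, which is where the added variety comes from.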

Another strength of YOLO v5 is its ease of use. Models can be loaded through PyTorch Hub in a few lines of code, and a range of pre-trained models is available, so researchers and developers can quickly integrate the detector into their projects. The architecture itself is defined in YAML configuration files, which lets users adapt the model to their specific requirements. This flexibility makes it a practical choice for both research and industry applications.
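As an illustration of how little code a pre-trained model needs, here is a minimal sketch using the PyTorch Hub entry point of the `ultralytics/yolov5` repository. It assumes PyTorch is installed and that the machine has network access to download the repository and weights on first use; the wrapper function `detect` is hypothetical.

```python
def detect(image_source, weights="yolov5s"):
    """Run a pre-trained YOLOv5 model on one image and return its detections.

    Each returned row is [x1, y1, x2, y2, confidence, class_index].
    """
    # Imported lazily, since this step needs PyTorch and a network connection.
    import torch

    model = torch.hub.load("ultralytics/yolov5", weights, pretrained=True)
    # The model accepts a file path, URL, PIL image, or NumPy array.
    results = model(image_source)
    return results.xyxy[0].tolist()
```

Calling `detect("image.jpg")` with a local image path would return one row per detected object. Passing a different `weights` name, such as `"yolov5m"`, selects a larger pre-trained variant.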

The popularity of YOLO v5 also rests on its performance on standard object detection benchmarks. On the COCO dataset, its model family covers a range of trade-offs between mAP (mean average precision) and inference speed: the smaller variants run in real time on modest hardware, while the larger variants reach higher accuracy at a higher computational cost. This lets users pick the model that matches their latency and accuracy requirements.
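For readers unfamiliar with mAP, the sketch below computes average precision for one class and then averages over classes. It assumes each detection has already been marked as a true or false positive (typically by matching it to a ground-truth box at some IoU threshold) and uses the area under the precision envelope; benchmark toolkits such as the COCO evaluator differ in details like interpolation and averaging over several IoU thresholds. The function names are hypothetical.

```python
def average_precision(is_true_positive, num_ground_truth):
    """Average precision for one class.

    is_true_positive -- one flag per detection, sorted by descending confidence
    num_ground_truth -- number of ground-truth objects of this class
    """
    true_positives = 0
    recalls, precisions = [0.0], [1.0]
    for rank, hit in enumerate(is_true_positive, start=1):
        true_positives += bool(hit)
        recalls.append(true_positives / num_ground_truth)
        precisions.append(true_positives / rank)
    # Build the precision envelope: precision never increases as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangle areas wherever recall increases.
    return sum(
        (recalls[i] - recalls[i - 1]) * precisions[i]
        for i in range(1, len(recalls))
    )


def mean_average_precision(per_class_ap):
    """Mean of the per-class average precision values."""
    return sum(per_class_ap) / len(per_class_ap)
```

For example, three detections flagged true, false, true against two ground-truth objects give an average precision of 5/6, because the second object is only found after one false alarm.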

In conclusion, YOLO v5 is a significant milestone in practical object detection. With its speed, scalable architecture, automatic anchor fitting, Mosaic data augmentation, and ease of use, it has become a widely adopted choice for detecting objects in images and video, in applications from autonomous vehicles to surveillance systems and robotics. As the field continues to evolve, it will be interesting to see how the ideas in YOLO v5 carry into future advances in computer vision.
